Artificial Intelligence - ML Operations

Overview

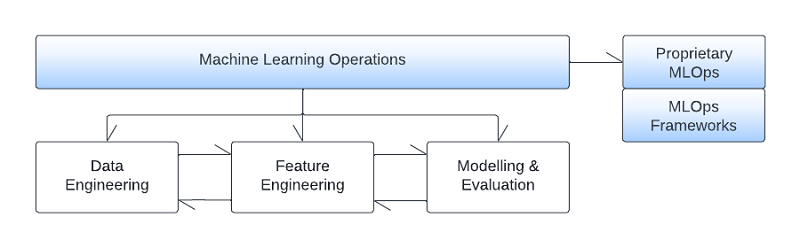

We define as Machine Learning Operations any process in an environment where AI assets are treated jointly with all other software infrastructure assets in a continuous improvement context. It is a set of methodologies that ensure efficient and reliable deployment and maintenance of AI solutions in production. MLOps is not a separate phase of the pipeline, rather it exists from its very beginning and follows the lifecycle of the project up-to and after productionisation. MLOps involve concepts such as iterative development and continuous integration, training, deployment, and improvement. Versioning as well as reproducibility and monitoring are also important parts of MLOps. Following MLOps best practices, significantly contributes to decreasing technical debt accumulation during the Artificial Intelligence project lifecycle.

Automation

The end goal of MLOps is fully automating the entire Artificial Intelligence pipeline, from its data engineering phase to deployment and productionisation of the AI models. Automated triggers in place, can respond to various events happening at any phase of the pipeline. The response can range from partial to full re-instantiation of the pipeline. Furthermore, a complete lineage of the entire process is generated, allowing governance of any change and its effects on the performance of the system.

Implementing fully automated MLOps is an iterative process during which, parts of the pipeline are gradually automated through appropriate orchestration. With time, a fully robotic pipeline is delivered able to build, train, test, deploy and monitor the entire AI system.

Lineage - Reproducibility

Continuous experimentation in AI is a natural process where research-centric practices, such as multiple experiment settings are being tested. A proper MLOps environment should be able to support lineage tracking of the experiment process including the steps, parameters, hyperparameters, versions of datasets, model cores and environment leading to the production pipeline. Reproducibility and versioning are key and can lead to important team velocity increase during building the pipeline.

MLOps solutions

Machine Learning Operations have several phases with specific deliverables that are expected including:

- MLOps design: This phase starts in parallel with the initial designs of the AI pipeline and includes software engineering stages such as business understanding, requirements, available resources, infrastructure, and availability of the required datasets.

- Experimentation and development: During this phase, orchestration automations on the entire AI pipeline are built to enable data ingestion and preparation as well modelling and evaluation of the AI components.

- Continuous Integration, Delivery and Monitoring: The results of this phase include deployable pipeline components, packaging, and inference services, pipeline building trigger components and metrics collection on AI live behaviour and performance.

There are numerous software frameworks available that can aid in implementing a full blown MLOps AI environment. The list is continuously evolving, and solutions can be divided to proprietary architectures and major vendor solutions. The former, use individual components that enable code versioning, artifact and model storage in registries, orchestrators, and monitoring tools as well as micro-service containers and service implementation tools. The later, deliver complete MLOps environments for AI pipelines both in-premises, on the Cloud and hybrid architectures. Examples of major vendor MLOps solutions include AWS Sagemaker, Azure MLOps, Google Cloud and Tensorflow MLOps. The Blueprints included in this use case page cover both types of such environments that our clients can deploy as per their needs.